In this Python tutorial, we will learn about the Adam optimizer in PyTorch and work through several examples of using it. We will cover the following topics.

- Adam optimizer PyTorch

- Adam optimizer PyTorch example

- Adam optimizer PyTorch code

- Rectified Adam optimizer PyTorch

- Adam optimizer PyTorch learning rate

- Adam optimizer PyTorch scheduler

- Adam optimizer PyTorch weight decay

- Adam optimizer PyTorch change learning rate


Adam optimizer PyTorch

In this section, we will learn how the Adam optimizer works in PyTorch.

- Before moving forward, let us recall what a PyTorch optimizer does. An optimizer updates the model parameters to reduce the loss (the error) while training a neural network.

- Adam is an optimization algorithm that can be used in place of plain stochastic gradient descent. It is efficient on large problems that involve a lot of data or many parameters.

- Adam is one of the most widely used optimizers for training neural networks in practice.

Syntax:

The following is the syntax of the Adam optimizer, shown with its default values.

```python
torch.optim.Adam(params, lr=0.001, betas=(0.9, 0.999), eps=1e-08, weight_decay=0, amsgrad=False)
```

The parameters used in this syntax:

- Adam: The optimizer class, used in place of plain gradient descent.

- params: An iterable of the parameters to optimize, for example model.parameters().

- lr: The learning rate, which sets the step size of each update. The default value is 0.001.

- betas: The two coefficients used to compute the running averages of the gradient and of its square.

- eps: A small term added to the denominator to improve numerical stability.

- weight_decay: Adds an L2 penalty on the parameters. The default value of the weight decay is 0.

- amsgrad: Whether to use the AMSGrad variant of the algorithm. The default value is False.
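As a minimal sketch of the syntax above, the snippet below builds an Adam optimizer with every argument written out and then reads the values back from the optimizer. The toy model (a 3-input linear layer) is an assumption made only for illustration.

```python
import torch
from torch import nn

# Hypothetical toy model, used only so the optimizer has parameters to manage.
model = nn.Linear(3, 1)

# Every argument is spelled out; these values match the PyTorch defaults.
optimizer = torch.optim.Adam(
    model.parameters(),
    lr=1e-3,
    betas=(0.9, 0.999),
    eps=1e-8,
    weight_decay=0,
    amsgrad=False,
)

# The settings are stored on the optimizer and can be inspected.
defaults = optimizer.defaults
print(defaults["lr"], defaults["betas"], defaults["eps"], defaults["weight_decay"])
```

Passing model.parameters() creates a single parameter group, so the same settings apply to the weight and the bias.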

Adam optimizer PyTorch example

In this section, we will work through an example of the Adam optimizer in PyTorch.

As we know, Adam can be used in place of plain gradient descent, and it is efficient on large problems that involve a large amount of data.

Adam also has modest memory requirements: besides the parameters themselves, it stores only two running averages per parameter.

Code:

In the following code, we import the required libraries and use the Adam optimizer to fit a linear model to noisy data.

- n = 100 is the number of data points.

- x = torch.randn(n, 1) generates the random input values.

- t = a * x + b + (torch.randn(n, 1) * error) builds the noisy target values that the model should learn.

- optimizer = optim.Adam(model.parameters(), lr=0.05) creates the optimizer.

- loss_fn = nn.MSELoss() defines the loss function.

- predictions = model(x) computes the predictions of the model.

- loss = loss_fn(predictions, t) calculates the loss.

```python
import torch
from torch import nn
import torch.optim as optim

# True slope and intercept that the model should recover
a = 2.4785694
b = 7.3256989
error = 0.1
n = 100

# Data
x = torch.randn(n, 1)
t = a * x + b + (torch.randn(n, 1) * error)

model = nn.Linear(1, 1)
optimizer = optim.Adam(model.parameters(), lr=0.05)
loss_fn = nn.MSELoss()

# Run training (10 steps only move the parameters a little;
# increase niter to get closer to a and b)
niter = 10
for _ in range(0, niter):
    optimizer.zero_grad()
    predictions = model(x)
    loss = loss_fn(predictions, t)
    loss.backward()
    optimizer.step()

    # Report the current estimates after each step
    print("-" * 10)
    print("learned a = {}".format(list(model.parameters())[0].data[0, 0]))
    print("learned b = {}".format(list(model.parameters())[1].data[0]))
```

Output:

After running the above code, we get the following output in which we can see that the value of the parameter is printed on the screen.
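To make the memory remark from this section concrete, here is a small sketch that inspects what Adam stores after one step. It assumes the state keys exp_avg and exp_avg_sq used by torch.optim.Adam; the toy model and data are made up for illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)
model = nn.Linear(1, 1)
optimizer = torch.optim.Adam(model.parameters(), lr=0.05)

# One training step so that the optimizer creates its internal state.
x = torch.randn(8, 1)
loss = nn.functional.mse_loss(model(x), 2 * x + 1)
loss.backward()
optimizer.step()

# For each parameter, Adam keeps a step counter plus two tensors with the
# same shape as the parameter: the running averages of the gradient
# (exp_avg) and of the squared gradient (exp_avg_sq).
state_keys = {}
shapes_match = True
for name, p in model.named_parameters():
    state = optimizer.state[p]
    state_keys[name] = sorted(state.keys())
    shapes_match = shapes_match and state["exp_avg"].shape == p.shape
    shapes_match = shapes_match and state["exp_avg_sq"].shape == p.shape
    print(name, state_keys[name], tuple(state["exp_avg"].shape))
```

So the extra memory is roughly two additional copies of the parameters, independent of the size of the dataset.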


Adam optimizer PyTorch code

In this section, we will learn how to implement the Adam optimizer from scratch in Python.

Adam is an optimization technique based on gradient descent. It needs little memory and works efficiently on large problems that involve a lot of data.

Code:

In the following code, we implement the Adam update rule by hand and use it to minimize a simple function.

- m_dw_corr = self.m_dw/(1-self.beta1**t) applies the bias correction to the first moment estimate.

- weight = weight - self.eta*(m_dw_corr/(np.sqrt(v_dw_corr)+self.epsilon)) updates the weight.

- w_0, b0 = adam.updates(t, weight=w_0, b=b0, dw=dw, db=db) updates the weight and bias values.

- print('converged after '+str(t)+' iterations') prints the number of iterations on the screen.

```python
import numpy as np


class AdamOptim():
    def __init__(self, eta=0.01, beta1=0.9, beta2=0.999, epsilon=1e-8):
        self.m_dw, self.v_dw = 0, 0
        self.m_db, self.v_db = 0, 0
        self.beta1 = beta1
        self.beta2 = beta2
        self.epsilon = epsilon
        self.eta = eta

    def updates(self, t, weight, b, dw, db):
        ## dw, db are from current minibatch
        ## momentum beta 1
        self.m_dw = self.beta1*self.m_dw + (1-self.beta1)*dw
        self.m_db = self.beta1*self.m_db + (1-self.beta1)*db

        ## rms beta 2 (running average of the squared gradients)
        self.v_dw = self.beta2*self.v_dw + (1-self.beta2)*(dw**2)
        self.v_db = self.beta2*self.v_db + (1-self.beta2)*(db**2)

        ## bias correction
        m_dw_corr = self.m_dw/(1-self.beta1**t)
        m_db_corr = self.m_db/(1-self.beta1**t)
        v_dw_corr = self.v_dw/(1-self.beta2**t)
        v_db_corr = self.v_db/(1-self.beta2**t)

        ## update weight and bias
        weight = weight - self.eta*(m_dw_corr/(np.sqrt(v_dw_corr)+self.epsilon))
        b = b - self.eta*(m_db_corr/(np.sqrt(v_db_corr)+self.epsilon))
        return weight, b


def lossfunction(m):
    return m**2-2*m+1

## take derivative
def gradfunction(m):
    return 2*m-2

def checkconvergence(w0, w1):
    # converged once the weight no longer changes between iterations
    return (w0 == w1)

w_0 = 0
b0 = 0
adam = AdamOptim()
t = 1
converged = False

while not converged:
    dw = gradfunction(w_0)
    db = gradfunction(b0)
    w_0_old = w_0
    w_0, b0 = adam.updates(t, weight=w_0, b=b0, dw=dw, db=db)
    if checkconvergence(w_0, w_0_old):
        print('converged after '+str(t)+' iterations')
        break
    else:
        print('iteration '+str(t)+': weight='+str(w_0))
        t += 1
```

Output:

After running the above code, we get the following output, in which the weight at each iteration and the number of iterations needed to converge are printed on the screen.
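As a rough cross-check of the update rule used in the AdamOptim class, the sketch below takes one step with torch.optim.Adam and compares it with the same step computed by hand. The starting values of w are arbitrary assumptions, and the comparison covers only the first step with default settings (no weight decay, no AMSGrad).

```python
import torch

lr, beta1, beta2, eps = 0.01, 0.9, 0.999, 1e-8

# Arbitrary starting point (an assumption for illustration).
w = torch.tensor([0.0, 3.0], requires_grad=True)
optimizer = torch.optim.Adam([w], lr=lr, betas=(beta1, beta2), eps=eps)

# Same toy loss as above, f(w) = w^2 - 2w + 1, summed over the entries.
loss = (w ** 2 - 2 * w + 1).sum()
loss.backward()
grad = w.grad.clone()
w_before = w.detach().clone()
optimizer.step()

# Hand-computed first step (t = 1), starting from zero moment estimates.
t = 1
m = (1 - beta1) * grad                 # first moment
v = (1 - beta2) * grad ** 2            # second moment
m_hat = m / (1 - beta1 ** t)           # bias-corrected first moment
v_hat = v / (1 - beta2 ** t)           # bias-corrected second moment
w_manual = w_before - lr * m_hat / (v_hat.sqrt() + eps)

print("torch :", w.detach())
print("manual:", w_manual)
```

On the first step the bias-corrected ratio is close to the sign of the gradient, so each entry moves by about lr regardless of how large its gradient is.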


Rectified Adam optimizer PyTorch

In this section, we will learn about the Rectified Adam (RAdam) optimizer in PyTorch.

- Rectified Adam is a variant of the Adam optimizer that aims to address the poor convergence Adam can show early in training.

- It does this by rectifying the variance of the adaptive learning rate.

Syntax:

The following is the syntax of the RAdam optimizer, which is used to tackle the poor convergence problem of Adam.

```python
torch.optim.RAdam(params, lr=0.001, betas=(0.9, 0.999), eps=1e-08, weight_decay=0)
```

The parameters used in this syntax:

- RAdam: RAdam, or Rectified Adam, is a variant of Adam that tackles the poor convergence problem of Adam.

- params: An iterable of the parameters to optimize.

- lr: The learning rate.

- betas: The two coefficients used to compute the running averages of the gradient and of its square.

- eps: A small term added to the denominator to improve numerical stability.

- weight_decay: Adds an L2 penalty on the parameters. The default value of the weight decay is 0.
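A minimal usage sketch of the syntax above follows. It assumes a PyTorch version that ships torch.optim.RAdam (1.10 or later) and uses made-up linear data; the learning rate of 0.05 and the 200 steps are illustrative choices, not recommendations.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Made-up data: the targets follow t = 2x + 1 exactly.
x = torch.randn(100, 1)
t = 2.0 * x + 1.0

model = nn.Linear(1, 1)
optimizer = torch.optim.RAdam(
    model.parameters(), lr=0.05, betas=(0.9, 0.999), eps=1e-8, weight_decay=0
)
loss_fn = nn.MSELoss()

losses = []
for _ in range(200):
    optimizer.zero_grad()
    loss = loss_fn(model(x), t)
    loss.backward()
    optimizer.step()
    losses.append(loss.item())

print("first loss:", losses[0])
print("last loss: ", losses[-1])
```

The training loop is the same as for Adam; only the optimizer class changes.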


Adam optimizer PyTorch learning rate

In this section, we will learn how the learning rate of the Adam optimizer works in PyTorch.

The learning rate sets the step size of each parameter update, and choosing it well matters when training deep neural networks.

Code:

In the following code, we import the required libraries, decay the learning rate of the Adam optimizer with a scheduler, and plot its value at each epoch.

- optimizers = torch.optim.Adam(model.parameters(), lr=100) creates the Adam optimizer with an initial learning rate of 100, a value far larger than is used in real training.

- scheduler = torch.optim.lr_scheduler.LambdaLR(optimizers, lr_lambda=lambda1) creates a scheduler that scales the initial learning rate by lambda1(epoch).

- lrs.append(optimizers.param_groups[0]["lr"]) records the current learning rate of the first parameter group.

- plot.plot(range(10),lrs) is used to plot the graph.

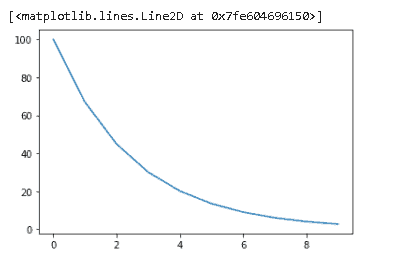

```python
import torch
import matplotlib.pyplot as plot

model = torch.nn.Linear(4, 3)
optimizers = torch.optim.Adam(model.parameters(), lr=100)

# Scale the initial learning rate by 0.67 ** epoch
lambda1 = lambda epoch: 0.67 ** epoch
scheduler = torch.optim.lr_scheduler.LambdaLR(optimizers, lr_lambda=lambda1)

lrs = []
for i in range(10):
    optimizers.step()
    lrs.append(optimizers.param_groups[0]["lr"])
    scheduler.step()

plot.plot(range(10), lrs)
```

Output:

After running the above code, we get the following output in which we can see that the Adam optimizer learning rate is plotted on the screen.
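For readers without a plotting backend, the same schedule can be checked numerically. This sketch assumes the LambdaLR behavior described in the PyTorch documentation, where the learning rate at each epoch is the initial learning rate multiplied by lr_lambda(epoch).

```python
import torch

model = torch.nn.Linear(4, 3)
optimizer = torch.optim.Adam(model.parameters(), lr=100)
scheduler = torch.optim.lr_scheduler.LambdaLR(
    optimizer, lr_lambda=lambda epoch: 0.67 ** epoch
)

observed = []
for epoch in range(5):
    # get_last_lr() returns one value per parameter group.
    observed.append(scheduler.get_last_lr()[0])
    optimizer.step()
    scheduler.step()

# Closed form: initial learning rate times 0.67 ** epoch.
expected = [100 * 0.67 ** epoch for epoch in range(5)]

for epoch, (o, e) in enumerate(zip(observed, expected)):
    print(epoch, o, e)
```

The learning rate starts at 100 and shrinks by a factor of 0.67 every epoch, which is the curve drawn by the plot.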


Adam optimizer PyTorch scheduler

In this section, we will learn how to use a learning rate scheduler with the Adam optimizer in PyTorch.

A scheduler adjusts the learning rate of each parameter group of the optimizer as training progresses.

Code:

In the following code, we import the required libraries and attach a scheduler to the Adam optimizer.

- models = torch.nn.Linear(6, 5) creates a single-layer feed-forward network.

- optimizers = torch.optim.Adam(models.parameters(), lr=100) creates the Adam optimizer for the model.

- scheduler = torch.optim.lr_scheduler.MultiplicativeLR(optimizers, lr_lambda=lmbda) creates a scheduler that multiplies the current learning rate by the factor returned by lmbda at each epoch.

- plot.plot(range(10),lrs) is used to plot the graph.

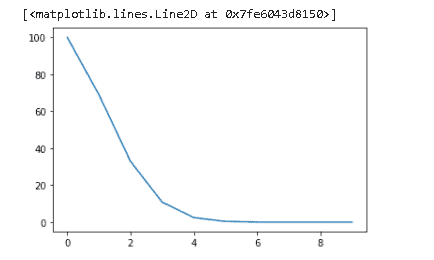

```python
import torch
import matplotlib.pyplot as plot

models = torch.nn.Linear(6, 5)
optimizers = torch.optim.Adam(models.parameters(), lr=100)

# Multiply the current learning rate by 0.69 ** epoch at each epoch
lmbda = lambda epoch: 0.69 ** epoch
scheduler = torch.optim.lr_scheduler.MultiplicativeLR(optimizers, lr_lambda=lmbda)

lrs = []
for i in range(10):
    optimizers.step()
    lrs.append(optimizers.param_groups[0]["lr"])
    scheduler.step()

plot.plot(range(10), lrs)
```

Output:

After running the above code, we get the following output, in which the scheduled learning rate of the Adam optimizer is plotted on the screen.
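Because MultiplicativeLR multiplies the current learning rate rather than the initial one, the factors accumulate from epoch to epoch. The sketch below checks this against a closed form; it assumes the documented behavior that no factor is applied at epoch 0.

```python
import math
import torch

models = torch.nn.Linear(6, 5)
optimizer = torch.optim.Adam(models.parameters(), lr=100)
scheduler = torch.optim.lr_scheduler.MultiplicativeLR(
    optimizer, lr_lambda=lambda epoch: 0.69 ** epoch
)

observed = []
for epoch in range(5):
    observed.append(optimizer.param_groups[0]["lr"])
    optimizer.step()
    scheduler.step()

# lr_t = lr_(t-1) * 0.69 ** t, so lr_t = 100 * 0.69 ** (1 + 2 + ... + t).
expected = [100 * 0.69 ** (t * (t + 1) // 2) for t in range(5)]

for t, (o, e) in enumerate(zip(observed, expected)):
    print(t, o, e)
```

With a factor that itself shrinks every epoch, the learning rate falls much faster than under LambdaLR with a similar lambda. A constant factor such as lambda epoch: 0.69 would give a plain exponential decay instead.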


Adam optimizer PyTorch weight decay

In this section, we will learn about weight decay with the Adam optimizer in PyTorch.

- Weight decay adds a penalty, usually the L2 norm of the weights, to the loss.

- Weight decay is therefore also described as adding an L2 regularization term to the loss.

- When all model parameters are passed to the optimizer, PyTorch applies the weight decay to both the weights and the biases.

- loss = loss + weight decay parameter * L2 norm of the weights

Syntax:

The following is the syntax of the Adam optimizer. Its weight_decay argument adds the L2 regularization term to the loss.

The default value of the weight decay is 0.

```python
torch.optim.Adam(params, lr=0.001, betas=(0.9, 0.999), eps=1e-08, weight_decay=0, amsgrad=False)
```

Parameters:

- params: An iterable of the parameters to optimize.

- betas: The two coefficients used to compute the running averages of the gradient and of its square.

- weight_decay: The weight decay coefficient, which adds the L2 regularization to the loss.
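The sketch below illustrates the L2 interpretation numerically. It assumes the penalty is written as (weight_decay / 2) * sum of squared weights, which is the form whose gradient is weight_decay * w; the data, the coefficient 0.1, and the variable names are made up for illustration.

```python
import torch

torch.manual_seed(0)
wd = 0.1
x = torch.randn(16, 3)
y = torch.randn(16, 1)

# Two copies of the same starting weights.
w_a = torch.randn(3, 1, requires_grad=True)
w_b = w_a.detach().clone().requires_grad_(True)

# A: weight decay handled by the optimizer.
opt_a = torch.optim.Adam([w_a], lr=0.01, weight_decay=wd)
# B: no weight decay in the optimizer, penalty added to the loss by hand.
opt_b = torch.optim.Adam([w_b], lr=0.01, weight_decay=0)

for _ in range(5):
    opt_a.zero_grad()
    loss_a = ((x @ w_a - y) ** 2).mean()
    loss_a.backward()
    opt_a.step()

    opt_b.zero_grad()
    loss_b = ((x @ w_b - y) ** 2).mean() + 0.5 * wd * (w_b ** 2).sum()
    loss_b.backward()
    opt_b.step()

max_diff = (w_a - w_b).abs().max().item()
print("largest difference between the two runs:", max_diff)
```

Both runs end at essentially the same weights, which is why the weight_decay argument of torch.optim.Adam is described as an L2 penalty.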

Adam optimizer PyTorch change learning rate

In this section, we will learn how to change the learning rate of the Adam optimizer in PyTorch.

Changing the learning rate means adjusting it during training instead of keeping it fixed, which is common when training deep neural networks.

Code:

In the following code, we import the required libraries and change the learning rate of the Adam optimizer with two different schedulers.

- optimizers = torch.optim.Adam(model.parameters(), lr=100) creates the Adam optimizer for the model and sets its initial learning rate.

- scheduler = torch.optim.lr_scheduler.LambdaLR(optimizers, lr_lambda=lambda1) creates the scheduler for the optimizer.

- plot.plot(range(10),lrs) is used to plot the graph on the screen.

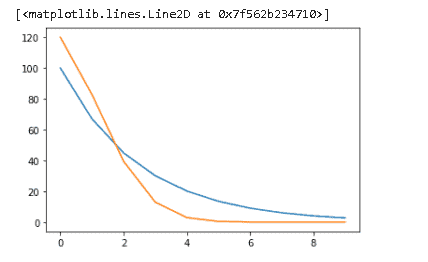

```python
import torch
import matplotlib.pyplot as plot

model = torch.nn.Linear(4, 3)

# First schedule: LambdaLR starting from lr=100
optimizers = torch.optim.Adam(model.parameters(), lr=100)
lambda1 = lambda epoch: 0.67 ** epoch
scheduler = torch.optim.lr_scheduler.LambdaLR(optimizers, lr_lambda=lambda1)

lrs = []
for i in range(10):
    optimizers.step()
    lrs.append(optimizers.param_groups[0]["lr"])
    scheduler.step()

plot.plot(range(10), lrs)

# Second schedule: MultiplicativeLR starting from lr=120 on the same model
optimizers = torch.optim.Adam(model.parameters(), lr=120)
lmbda = lambda epoch: 0.69 ** epoch
scheduler = torch.optim.lr_scheduler.MultiplicativeLR(optimizers, lr_lambda=lmbda)

lrs = []
for i in range(10):
    optimizers.step()
    lrs.append(optimizers.param_groups[0]["lr"])
    scheduler.step()

plot.plot(range(10), lrs)
```

Output:

After running the above code we get the following output in which we can see that the Adam optimizer change learning rate graph is plotted on the screen.
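A scheduler is not the only way to change the learning rate. The sketch below sets it by hand through the param_groups of the optimizer; the helper name set_learning_rate and the values 0.01 and 0.001 are assumptions made for illustration.

```python
import torch

model = torch.nn.Linear(4, 3)
optimizer = torch.optim.Adam(model.parameters(), lr=0.01)
lr_before = optimizer.param_groups[0]["lr"]


def set_learning_rate(optimizer, new_lr):
    """Hypothetical helper: overwrite the learning rate of every parameter group."""
    for group in optimizer.param_groups:
        group["lr"] = new_lr


set_learning_rate(optimizer, 0.001)
lr_after = optimizer.param_groups[0]["lr"]

print("before:", lr_before, "after:", lr_after)
```

The new value takes effect from the next call to optimizer.step(), and the running averages that Adam has accumulated so far are kept.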

So, in this tutorial, we discussed the Adam optimizer in PyTorch and covered different examples related to its implementation. Here is the list of topics that we have covered.

- Adam optimizer PyTorch

- Adam optimizer PyTorch example

- Adam optimizer PyTorch code

- Rectified Adam optimizer PyTorch

- Adam optimizer PyTorch learning rate

- Adam optimizer PyTorch scheduler

- Adam optimizer PyTorch weight decay

- Adam optimizer PyTorch change learning rate

Bijay Kumar

I am Bijay Kumar, a Microsoft MVP in SharePoint. Apart from SharePoint, I have been working on Python, machine learning, and artificial intelligence for the last 5 years. During this time I have gained expertise in various Python libraries such as Tkinter, Pandas, NumPy, Turtle, Django, Matplotlib, Tensorflow, Scipy, and Scikit-Learn, for various clients in the United States, Canada, the United Kingdom, Australia, and New Zealand.